Are you an app developer struggling with navigation data? I’ve worked in this field and seen several recurring issues, but there are techniques and tools, such as Google Maps scraping, that can help you get past them.

But why is location metadata necessary? According to Google Help, “Location Accuracy data is used to validate the accuracy of maps-based information, such as building entrances or routing directions.” (How Location Accuracy Improves Location)

In this article, I’ll tell you about time-saving methods for developers to acquire location information. It’s a detailed write-up, so read carefully.

Geographic data is essential in the modern world. Logistics, mapping applications, and analytics all depend on it. The Global Positioning System (GPS) helps businesses in several ways, such as analyzing customers’ buying patterns, mapping locations, and finding the best delivery routes.

Developers can also enhance the user experience of their projects, which is why proper geographic data extraction is important.

A thoughtful approach to gathering and managing map data matters because it supports compliance, privacy, and reliability. Here are some best practices:

You should always know the source of your location data, that is, whether it comes from GPS, cell tower signals, or Wi-Fi triangulation. Credibility and legitimacy also matter, so make sure the information you extract comes from a source you can trust.

The quality of your data directly affects app performance and reliability. You can cross-check your findings against trusted sources.
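One simple way to cross-check a coordinate is to compare it with the same place from a second source and see how far apart the two points are. Here is a minimal sketch of that idea using the haversine formula. The function names and the 50-metre tolerance are my own assumptions for illustration, so tune them to your app.

```python
import math

EARTH_RADIUS_M = 6_371_000  # mean Earth radius in metres


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in metres between two points given in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlambda = math.radians(lon2 - lon1)
    a = (math.sin(dphi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(dlambda / 2) ** 2)
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def sources_agree(primary, reference, tolerance_m=50.0):
    """True if two (lat, lon) readings are within tolerance_m of each other.

    tolerance_m is an assumed threshold, not a standard value.
    """
    return haversine_m(*primary, *reference) <= tolerance_m


# Two readings for the same place that differ by a few metres
print(sources_agree((48.8584, 2.2945), (48.8585, 2.2946)))
```

If the two sources disagree by more than your tolerance, flag the record for review instead of silently picking one.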

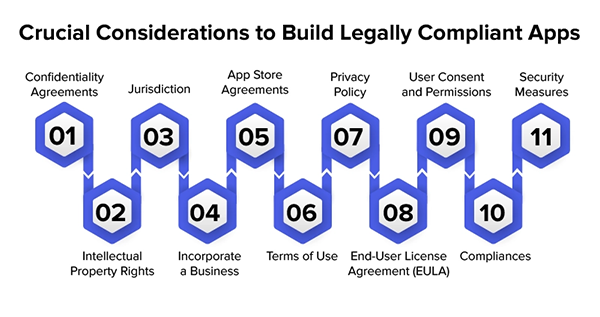

You should always follow your local and state laws and maintain legal standards. GDPR and CCPA have strict rules for handling personal information, which includes location data.

Be clear about why you need the data and what you are going to do with it, since transparency builds trust. Ask for the user’s permission, and respect their decision if they deny it. Protect privacy at all costs, as a violation can lead to serious legal trouble.

Web scraping and API integration are data extraction techniques that software developers can use to acquire coordinates from several sources. For fast performance, indexing methods and compression techniques are ideal.

Developers have various tools for working with location details and managing files efficiently. Reducing content size and indexing the records make searches within the database faster and smoother.
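To show what indexing can look like in practice, here is a minimal sketch of a grid index that buckets points into fixed-size cells, so a nearby search only touches a handful of cells instead of every record. The `GridIndex` class and the 0.01-degree cell size are assumptions I made for illustration. A production system would more likely rely on the spatial index built into its database.

```python
import math
from collections import defaultdict


class GridIndex:
    """Toy spatial index: buckets points into square cells of cell_deg degrees."""

    def __init__(self, cell_deg=0.01):
        self.cell_deg = cell_deg
        self.cells = defaultdict(list)

    def _key(self, lat, lon):
        # Integer (row, column) of the cell that contains the point
        return (math.floor(lat / self.cell_deg), math.floor(lon / self.cell_deg))

    def add(self, name, lat, lon):
        self.cells[self._key(lat, lon)].append((name, lat, lon))

    def nearby(self, lat, lon):
        """Return entries in the cell containing the point and its 8 neighbours."""
        row, col = self._key(lat, lon)
        found = []
        for d_row in (-1, 0, 1):
            for d_col in (-1, 0, 1):
                found.extend(self.cells.get((row + d_row, col + d_col), []))
        return found


index = GridIndex()
index.add("cafe", 40.7128, -74.0060)
index.add("museum", 40.7794, -73.9632)
print([name for name, _, _ in index.nearby(40.7130, -74.0055)])
```

The trade-off is the cell size: smaller cells mean fewer candidates per lookup but more buckets to store.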

Geocoding converts a place or street address into exact coordinates, consisting of latitude and longitude. Reverse geocoding does the opposite: it pinpoints the street address that corresponds to a given pair of coordinates. It’s generally quite accurate.
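To make the two directions concrete, here is a toy sketch that works against a tiny in-memory lookup table. Everything in it is hypothetical: the addresses and coordinates are made up, and `geocode` and `reverse_geocode` are my own helper names, not any provider’s API. A real app would call a geocoding service instead.

```python
import math

# Made-up sample data for illustration only; not real places.
GAZETTEER = {
    "10 example street, sampletown": (40.00, -75.00),
    "22 demo avenue, sampletown": (40.01, -75.02),
}


def _normalize(address):
    """Lowercase the address and collapse extra whitespace."""
    return " ".join(address.lower().split())


def geocode(address):
    """Address -> (lat, lon), or None if the address is unknown."""
    return GAZETTEER.get(_normalize(address))


def _approx_distance(lat1, lon1, lat2, lon2):
    """Equirectangular approximation; only used to rank nearby points."""
    x = math.radians(lon2 - lon1) * math.cos(math.radians((lat1 + lat2) / 2))
    y = math.radians(lat2 - lat1)
    return math.hypot(x, y)


def reverse_geocode(lat, lon):
    """(lat, lon) -> the closest known address in the table."""
    return min(GAZETTEER, key=lambda addr: _approx_distance(lat, lon, *GAZETTEER[addr]))


print(geocode("10 Example Street, Sampletown"))
print(reverse_geocode(40.009, -75.019))
```

Notice that reverse geocoding always returns the nearest known address, even when that address is far away, which is one reason the results are worth checking.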

To be on the safe side, developers usually check the results regularly for reliability so that they can resolve any issues immediately. Scalability is another crucial aspect, as it plays a major role in processing and storing geographic data.

Your system should be able to handle growth in stored information while maintaining its accuracy. Programmers can achieve this by designing the data extraction process to scale smoothly.

Google Maps scraping is a useful technique for developers, as it helps them harvest relevant information from Google Maps itself. You can find details like contact information, customer reviews, and business addresses.

Such details are important for building maps, understanding market trends, or creating business directories. The data is typically up to date, which makes it useful for many purposes.

However, it needs to be used responsibly and ethically. As mentioned earlier, misuse of personal information can cause several issues for you, so be careful with how you handle it.

PRO TIP Implement different privacy techniques to further enhance user privacy!
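One privacy technique you could try is coarsening coordinates before you store them, so a saved point no longer identifies an exact building. This is a minimal sketch under my own assumptions: the `coarsen` helper is hypothetical, and two decimal places (roughly 1.1 km of latitude) is just an example setting.

```python
def coarsen(lat, lon, decimals=2):
    """Round coordinates to fewer decimal places to reduce their precision.

    decimals=2 is an assumed default; pick a value that fits your use case.
    """
    return (round(lat, decimals), round(lon, decimals))


print(coarsen(40.712776, -74.005974))  # (40.71, -74.01)
```

Rounding alone is not full anonymization, so treat it as one layer alongside consent and access controls.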

In this article, I explained why developers who create location-based applications need to be accurate and manage records responsibly. It’s good that you want to create an easy-to-access program.

But make sure to meet every legal requirement so that you don’t get in trouble. Data theft and misuse are serious problems in the modern world, and the penalties can be severe. So be careful, and all the best.